LLM-Generated SQL in Production: What Breaks and How to Validate It

.png)

TL;DR - Executive Summary

What we learned from 50,000+ production queries:

- Most errors are schema & join issues - Not syntax errors. Production failures are dominated by schema hallucinations (wrong table names, missing columns) and incorrect join paths (missing intermediate tables)

- Semantic layers dramatically reduce errors - As we showed in our NLQ architecture guide, accuracy jumps from ~55% to 90%+ with semantic context. Our evaluation data reveals exactly which error types this prevents

- Build cost vs platform: $50-80K first year (engineering time) vs 1-2 weeks integration with a platform

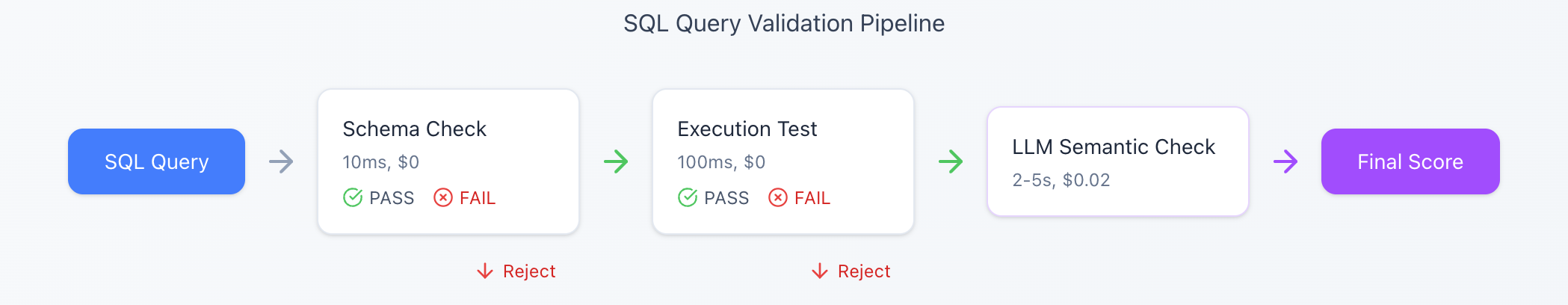

- Priority order: Week 1: Schema validation → Week 2: Execution testing → Month 2: LLM-as-a-Judge semantic validation

This guide complements our previous posts:

- Why LLMs fail at SQL → Explained the theoretical problems

- NLQ pipeline architecture → Showed how to build reliable systems

- This post → Shows how to validate and measure SQL correctness with code

Quick decision framework:

- Start with schema validation (catches most errors with minimal code)

- Add semantic layer before building complex evaluation

- Use LLM-as-a-Judge for semantic correctness, not syntax

- Consider build vs buy based on your scale and timeline

Most guides on SQL evaluation read like textbooks. This one doesn't.

At DataBrain, we've processed over 50,000 NL-to-SQL queries across hundreds of customer databases in production. We've seen every failure mode, tried every validation approach, and learned what actually matters vs what sounds good in theory.

How this guide builds on our previous work:

- In our LLM limitations post, we covered why LLMs struggle with SQL generation

- In our NLQ architecture guide, we showed how to build reliable NL-to-SQL systems

- This post shows you how to validate and measure SQL correctness with code examples, production patterns, and a prioritization framework

The reality? Most SQL validation advice is wrong about priorities.

Teams waste months building complex logical equivalence testing when most of their errors are simple schema hallucinations that could be caught in a few lines of code. They obsess over syntax validation when syntax errors are rare in production. They build evaluation frameworks before building the one thing that actually prevents errors: a semantic layer.

Here's what we wish someone had told us 18 months ago, with the battle scars to prove it.

The Hard Truth About LLM-Generated SQL

If your SQL query runs and returns results, is it correct?

In traditional SQL development: Probably yes.

In LLM-generated SQL: Absolutely not.

In our LLM limitations analysis, we covered why this happens theoretically—LLMs lack true understanding of relational logic. But evaluating 50,000+ real production queries revealed something even more specific: Most broken queries execute successfully and return data.

The problem isn't syntax errors or execution failures. The queries run perfectly. They just answer the wrong question.

This is the heart of the LLM SQL problem: Intent errors that look like working code. And it's why traditional validation approaches (syntax checking, execution testing) fail to catch them.

The Real Failure Distribution

The 6 Failure Modes That Matter (With Real Examples)

Let me show you exactly what breaks in production, with real queries we've seen.

1. Schema Hallucination

In our LLM limitations analysis, we covered why LLMs fabricate schema elements. But evaluating 50,000+ production queries revealed the specific patterns that break most often.

Production example from our query logs:

User asks: "Show me revenue by product category this month"

LLM generates:

Status: ✅ Valid syntax

Status: ✅ Executes successfully

Result: Returns all NULL values

The problem (production-specific):

- In this customer's schema, revenue actually lives in order_items table, not products

- The date column exists as created_at in orders table, not products

- LLM assumed standard e-commerce naming conventions that this schema doesn't follow

Why this is the #1 error type in our 50K+ query dataset: LLMs pattern-match against training data. They see "revenue" and "products" commonly appear together in documentation, so they assume they're in the same table. In production schemas with non-standard naming (legacy systems, acquisitions, domain-specific terminology), this assumption fails constantly.

Real production patterns we've caught:

- Customer uses order_value but LLM generates order_amount (close, but wrong)

- Schema has created_date but LLM uses date or timestamp

- Finance team calls it recognized_revenue, LLM uses revenue

- Multiple id columns with unclear naming: user_id, customer_id, account_id all pointing to different things

How to catch it programmatically:

Better solution (prevention): Use a semantic layer that maps natural language terms like "revenue" to the correct table and column (order_items.order_value) automatically, regardless of your schema's naming conventions.

2. Join Path Errors

User asks: "Which customers bought product X in the last month?"

LLM generates:

Status: ❌ Execution fails

Error: column p.customer_id does not exist

The problem: No direct relationship between customers and products. The actual path is:

customers → orders → order_items → products

Why it happens: LLMs see the entities (customers, products) and invent plausible-looking join keys. They don't understand your actual schema relationships.

Impact: This is one of the most expensive error types because:

- Sometimes it fails execution (you catch it)

- Sometimes it returns wrong results through a different join path (you don't catch it)

How we fixed it at DataBrain:

Define valid relationships in your semantic layer:

Then validate that generated SQL only uses these paths. If LLM tries to join customers directly to products, reject it and suggest the correct path.

Result: Significantly reduced join path errors in production.

3. Wrong Aggregation Logic

User asks: "What's the average order value by customer?"

LLM generates:

Status: ✅ Valid syntax

Status: ✅ Executes successfully

Result: Returns completely wrong numbers

The problem: This calculates average product price, not average order value. It should be:

Why it happens: "Average order value" could mean:

- AVG(order total) per customer ← Correct

- AVG(product price) across customer's orders ← LLM chose this

- SUM(order totals) / COUNT(orders) ← Also valid

LLMs make educated guesses about aggregation semantics. Sometimes they guess wrong.

How to catch it: This is where LLM-as-a-Judge becomes essential (we'll show implementation later).

4. Missing Required Filters

User asks: "Show me active customers by region"

LLM generates:

Status: ✅ Valid syntax

Status: ✅ Executes successfully

Result: Includes inactive customers, trial accounts, deleted records

The problem: Missing WHERE status = 'active' filter. The business definition of "customers" has implicit filters that aren't in the raw question.

Why it matters: This is how you get CFOs making decisions on wrong metrics.

How we fixed it: Pre-defined metrics in the semantic layer:

When someone asks about "customers," the semantic layer automatically applies the business logic.

5. Wrong Table Selection

User asks: "Show me daily revenue"

LLM generates:

Seems reasonable, right?

The problem: Your business actually uses revenue_recognition table (not payments) because:

- Payments include refunds

- Revenue is recognized on delivery, not payment

- Payments can be split across months

Result: Numbers are 15-20% off from finance reports.

Why it happens: LLMs can't infer business logic. They pick the most obvious-sounding table.

Solution: Semantic layer defines the source of truth for each metric.

6. Syntax Errors

Yes, syntax errors happen. But they're the LEAST of your problems because:

- They're immediately obvious

- They fail fast

- They're easy to catch

Don't start here. Start with the semantic errors that pass syntax validation.

If You're Building SQL Evaluation: Here's the Priority Order

Based on production experience, here's the order that gives you the best ROI:

Validation Approaches Comparison

Key insight: Semantic layer is the most effective approach because it prevents errors during SQL generation, not after.

Phase 1: Schema Validation (Week 1)

What it does:

- Verify tables exist

- Verify columns exist

- Verify join keys are valid

Effort: Low (1-2 days)

Impact: High (catches schema hallucinations and table reference errors)

Core implementation:

Cost: ~10ms per query | ROI: Catches most common errors before execution

Phase 2: Execution Testing (Week 2)

What it does:

- Actually run the SQL against a test database

- Validate it executes without errors

- Check result schema matches expectations

Effort: Medium (3-5 days)

Impact: High (catches execution failures, permission errors, type mismatches)

Core implementation:

Phase 3: Semantic Validation with LLM-as-a-Judge (Month 2)

This is where it gets interesting. Schema validation and execution testing catch obvious errors. But how do you catch "correct SQL that answers the wrong question"?

Enter: LLM-as-a-Judge

The pattern:

- Use an LLM to evaluate if generated SQL semantically matches the question

- Compare against expected SQL (if you have it)

- Get structured feedback on what's wrong

Why it works:

- LLMs can reason about semantic correctness

- Catches aggregation mistakes, missing filters, wrong logic

- Provides actionable explanations

Core implementation:

Cost: ~$0.01-0.05 per evaluation | Best for: Benchmark testing, not real-time validation

Cost considerations:

- ~$0.01-0.05 per evaluation (GPT-4)

- For 1,000 benchmark queries: ~$10-50

- Much cheaper than engineer time debugging

When to use it:

- Benchmark testing (compare generated vs expected SQL)

- Production monitoring (spot-check queries for semantic drift)

- Quality gates (require >80 score before execution)

When NOT to use it:

- Real-time validation for every query (too slow/expensive)

- As your only validation layer (use rules first, LLM as backup)

Phase 4: Multi-Dimensional Scoring (Month 3) - Continuous Improvement

Once you have the basics, combine them into a comprehensive scoring system:

Scoring formula:

Benefits:

- Single metric to track over time

- Breakdown shows which dimension is failing

- Weighted scores reflect what matters most

The Build vs Buy Decision

Let's be honest about costs.

Building SQL Evaluation In-House

- Timeline: 2-3 weeks (MVP) → 2-3 months (production-grade)

- Cost: ~$50-80K first year (engineering + infrastructure)

- Ongoing: Part-time maintenance + $100-500/month (APIs + databases)

Using a Platform (DataBrain)

Setup: 1-2 weeks | Cost: Platform subscription (no engineering time) Includes: Schema validation, execution testing, multi-dimensional scoring, semantic layer, historical tracking

When to Build vs Buy

Build if:

- You have <100 benchmark queries

- Simple schema (5-10 tables)

- NL-to-SQL is a side feature, not core product

- You have spare ML engineering capacity

- You need 100% custom evaluation logic

Use a platform if:

- Complex schema (50+ tables)

- Multiple databases/data sources

- NL-to-SQL is a core product capability

- You want to ship in weeks, not months

- You need semantic layer + evaluation integrated

- Multi-tenant architecture with row-level security

The real question: Is SQL evaluation your competitive advantage, or is it infrastructure that supports your actual product?

If it's infrastructure, use a platform. If it's your core IP, build it.

The Semantic Layer Advantage: Prevention > Validation

Here's the uncomfortable truth we learned: The best SQL evaluation strategy is not generating bad SQL in the first place.

As we covered in our NLQ architecture guide, semantic layers improve accuracy from ~55% to 90%+ by giving LLMs structured context. But after evaluating 50,000+ queries, we can now show you which specific error types semantic layers prevent—and how to validate that improvement.

The Traditional Approach (What We Built First)

User Question → LLM → SQL Generation → Validation → Fix if broken → Execute

Problem: You're playing defense. Validation catches errors after generation.

The Semantic Layer Approach (What We Should Have Built)

User Question → Semantic Layer Context → LLM → Constrained SQL → Execute

Advantage: The LLM generates against a governed data model, preventing errors at the source.

As we explained in our LLM limitations post, humans can't memorize schema complexity—LLMs struggle even more. Semantic layers exist to solve this exact problem by encoding schema relationships, business logic, and naming conventions upfront.

Real Impact at DataBrain

Semantic layers dramatically improve SQL accuracy: fewer errors, fewer fixes needed, faster corrections. See our NLQ architecture guide for the ~55% → 90%+ accuracy improvement data.

What a Semantic Layer Provides

What a semantic layer provides:

1. Pre-defined Table Relationships

customers → orders → order_items → products

LLM generates joins using known valid paths. Dramatically reduces join path failures.

2. Metric Definitions

revenue: SUM(order_items.quantity * unit_price)

WHERE orders.status = 'completed' AND refunded = false

User asks "revenue" → LLM uses pre-defined calculation. Eliminates most aggregation mistakes.

3. Column Synonyms

email_address: [email, contact_email, customer_email]

created_date: [signup_date, registration_date, joined_date]

LLM maps natural language to actual column names. Greatly reduces schema hallucinations.

4. Business Logic Filters

"customers" → automatically includes: status='active' AND deleted_at IS NULL

Default filters applied automatically. Eliminates most missing filter errors.

The Combined Effect: Measurable Through Evaluation

This is where our 50,000+ query evaluation dataset becomes valuable. We can now measure what the NLQ architecture guide theorized: semantic layers don't just improve accuracy—they change the distribution of error types.

What our evaluation framework reveals:

Without semantic layer:

- Schema hallucinations dominate error logs

- Join path errors in 1 out of 4 failed queries

- Heavy reliance on post-generation validation

- Most fixes require understanding business logic

With semantic layer:

- Schema errors nearly eliminated (pre-defined relationships)

- Join path errors rare (validated relationships)

- Validation catches edge cases, not structural issues

- Fixes are typically about edge cases, not fundamentals

The evaluation insight: Semantic layers don't just reduce error rate—they change error types. Instead of fixing "wrong table" (requires schema knowledge), you're fixing "missing edge case filter" (requires domain knowledge). The latter is far easier to address systematically.

Battle-Tested Patterns We Learned the Hard Way

Pattern 1: Validate Fast, Then Deep

Don't run expensive LLM evaluation on queries that fail basic schema checks.

Validation pipeline:

Cost savings: By failing fast on schema/execution errors, we avoid expensive LLM calls for queries that are obviously broken.

Pattern 2: Benchmark Tiers, Not Single Pass/Fail

Not all queries are equally important. Tier your benchmarks:

This lets you:

- Gate deployments on critical query accuracy

- Track overall system health

- Prioritize what to fix

Pattern 3: Collect Production Failures to Improve Benchmarks

Your benchmark queries should reflect actual usage.

Process:

- Log all production queries (question, SQL, success, user feedback)

- Weekly: Query for patterns: WHERE success = false OR user_feedback = 'incorrect'

- Add common failures (≥3 occurrences) to benchmark suite

- Re-test to ensure they're fixed

Result: Benchmark suite evolves from hand-written queries to cover real user patterns

Pattern 4: Monitor Semantic Drift Over Time

Your data changes. Your LLM may change. Track accuracy trends:

What to monitor:

- Weekly average evaluation scores

- Score drop >5 points = alert

- Query volume trends

Catches:

- Schema changes breaking queries

- LLM updates changing behavior

- New query patterns the system can't handle

Implementation: Track AVG(overall_score) by week, alert on significant drops

Common Mistakes to Avoid

Mistake 1: Building Logical Equivalence Testing First

What teams do: Spend months building complex query comparison that checks if two queries are semantically equivalent.

Why it's wrong: This catches very few real errors but takes most of the development time.

Do this instead: Start with schema validation. It catches far more errors with much less effort.

Mistake 2: Only Testing Happy Paths

What teams do: Benchmark queries like "What's total revenue?"

Why it's wrong: Real users ask messy questions: "show me customers who bought X but not Y in Q3 excluding refunds."

Do this instead: Include edge cases in your benchmark suite:

- Multi-hop joins

- Complex filters with AND/OR

- Negations ("customers who did NOT...")

- Time-based aggregations

- NULL handling

Mistake 3: Treating All Errors Equally

What teams do: PASS/FAIL scoring where 79% = 99% = FAIL

Why it's wrong: A missing ORDER BY is not the same as using the wrong table for revenue.

Do this instead: Severity-based scoring:

Mistake 4: Not Validating Validation

What teams do: Assume their evaluation system is correct.

Why it's wrong: We've found cases where "failing" queries were actually correct—our validator was wrong.

Do this instead:

- Manually review random samples of "failures"

- Track false positive rate

- Have humans grade a subset, compare to LLM-as-a-Judge scores

What You Should Do Next

If evaluating NL-to-SQL solutions: Ask vendors about validation approach (not just syntax), semantic layer usage, and request benchmark results with your schema complexity.

If building in-house: Start with schema validation + execution testing + 20 benchmark queries. Skip complex logical equivalence and elaborate UIs initially.

If you want to skip the build: DataBrain includes multi-dimensional evaluation, semantic layer, benchmark management, and LLM-as-a-Judge validation built-in. Start free trial →

Frequently Asked Questions (FAQ)

What is the best way to validate LLM-generated SQL?

The best approach combines multiple layers:

- Start with schema validation (fast, catches most common errors like hallucinated tables/columns)

- Add execution testing (verifies queries actually run)

- Use a semantic layer for prevention (stops errors before generation)

- Implement LLM-as-a-Judge for semantic correctness (validates the SQL answers the actual question)

Key insight: Don't rely on a single validation method. Schema validation + semantic layer + selective LLM evaluation gives the best results in production.

Should I build or buy SQL evaluation tools?

Build if:

- You have <100 benchmark queries and simple schema

- NL-to-SQL is a side feature, not core to your product

- You have spare ML/data engineering capacity

- Total cost: ~$50-80K first year (engineering time)

Buy/Use a platform if:

- Complex schema (50+ tables) or multiple databases

- NL-to-SQL is core to your product

- You need to ship in weeks, not months

- You want semantic layer + evaluation integrated

- Setup time: 1-2 weeks

The deciding factor isn't capability (you can build anything) but opportunity cost. Is SQL evaluation your competitive advantage, or supporting infrastructure?

How do you catch semantic errors in SQL?

Semantic errors (correct syntax, wrong logic) are the hardest to catch. Here's how:

1. Semantic Layer (Prevention)

- Define relationships, metrics, and business logic upfront

- LLM generates against governed data model

- Prevents most semantic errors before they occur

2. LLM-as-a-Judge (Detection)

- Use a second LLM to evaluate if generated SQL matches question intent

- Compares semantic meaning, not just syntax

- Provides actionable feedback on what's wrong

3. Golden Dataset Testing

- Maintain benchmark queries with expected outputs

- Test new SQL generation against known-good examples

- Track accuracy trends over time

4. Multi-Dimensional Scoring

- Evaluate across syntax, semantics, execution, efficiency, and schema

- Weighted scoring shows which dimension is failing

- Helps prioritize fixes

In production, the semantic layer is your best defense. Validation catches what slips through.

What are the most common LLM SQL generation errors?

Based on our 50,000+ query evaluation dataset, here are the top failure modes ranked by frequency and production impact:

- Schema hallucination - LLM invents tables or columns that sound right but don't exist

- Production examples: revenue in products table (actually in order_items), date column that's actually called created_at, financial metrics like recognized_revenue that LLM shortens to revenue

- Why it happens: Non-standard naming conventions, legacy schemas, domain-specific terminology

- Incorrect join paths - Wrong relationships between tables or missing intermediate joins

- Production examples: Direct join from customers to products (missing orders and order_items intermediate tables)

- Wrong aggregation logic - AVG vs SUM, incorrect grouping, mixing aggregated and raw data

- Missing required filters - Business logic filters (like "active customers only") not applied

- Wrong table selection - Choosing denormalized table when granular fact table is needed

Critical insight from evaluation data: Syntax errors are rare. The vast majority of failures are semantic issues that pass syntax validation but return wrong results. This is why traditional testing approaches (syntax checking, execution testing) catch <10% of real production errors.

How much does it cost to build SQL evaluation in-house?

DIY Timeline:

- Phase 1 (Minimum viable): 2-3 weeks

- Phase 2 (Production-grade): 2-3 months

- Ongoing: Part-time engineering effort

Costs:

- First year: ~$50-80K (engineering time)

- Ongoing: $100-500/month (LLM API + test DB)

Platform alternative: 1-2 weeks setup, no engineering investment

Can I use the code examples from this guide?

Yes! All code examples are MIT licensed and production-tested. Install with: pip install sqlparse sqlalchemy openai

Conclusion

After extensive production experience with SQL evaluation, here's what matters:

- Schema validation catches the most common errors with minimal code. Start here.

- Semantic layer prevents most errors at the source. Build this before complex validation.

- LLM-as-a-Judge is the right tool for semantic correctness, but use it strategically (not for every query).

- Multi-dimensional scoring beats binary PASS/FAIL. Track trends over time.

- Validation is infrastructure, not competitive advantage. Consider whether you should build it.

The goal isn't perfect SQL generation—that's impossible. The goal is catching errors before they reach users, and continuously improving accuracy over time.

If you're building NL-to-SQL, you need evaluation. The question is: will you spend 3 months building it, or 3 days integrating it?

Ready to see it in action? Explore DataBrain's embedded analytics platform →

Requirements & Code Dependencies

Core dependencies for examples in this guide:

pip install sqlparse sqlalchemy openai

Note: All code examples are production-tested patterns and MIT licensed for your use.

Questions? Want to discuss your SQL evaluation strategy?

📧 support@usedatabrain.com

Accessibility Note

All code examples in this article include descriptive context. For screen reader users:

- Code blocks are preceded by explanatory text describing their purpose

- Python examples demonstrate schema validation, execution testing, and LLM-based evaluation patterns

- SQL examples show both incorrect (with issues) and correct (fixed) query versions