Why Self-Hosted Embedded Analytics Matter in 2026


Container-based deployment has eliminated the trade-off between control and convenience

Five years ago, self-hosted analytics meant a six-month implementation, a dedicated infrastructure team, and features that lagged behind cloud alternatives. The conventional wisdom was clear: accept the overhead of cloud-hosted analytics, because the self-hosted alternative was worse.

That calculus has fundamentally changed. Container orchestration, infrastructure-as-code, and modern deployment patterns have collapsed the complexity barrier. Today, a production-ready self-hosted analytics platform deploys in minutes—not months. For companies embedding analytics into their products, this shift opens up architectural options that were previously impractical.

Is Self-Hosted Right for You?

Self-hosted makes sense when:

- You're embedding analytics in a product serving hundreds or thousands of end users

- Data residency, compliance, or contractual obligations prevent cloud usage

- You need sub-10ms query latency for real-time analytics

- Your customers require it for their security policies

- You want predictable costs vs. per-user pricing

Stick with cloud-hosted when:

- You're a small team (< 5 people) moving fast without DevOps capacity

- Data sensitivity is minimal

- You need elastic scaling for unpredictable usage

- Infrastructure isn't a competitive differentiator

If you're in the first category, read on.

What Changed: The Technical Shift

From Installation Scripts to Container Images

The single biggest change is containerization. Modern self-hosted analytics ships as Docker images:

Legacy self-hosted (pre-2020):

1. Provision servers (2-3 days)

2. Install OS dependencies (half day)

3. Configure database (half day)

4. Run installation wizard (2-4 hours)

5. Debug environment-specific issues (1-3 days)

6. Configure networking/SSL (1 day)

7. Test and validate (1-2 days)

Total: 1-2 weeks minimum

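Modern self-hosted:

Pull the container images, supply configuration, start the stack with docker-compose or Kubernetes, and confirm the health checks pass.

Total: 10-15 minutes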

Same outcome. Fraction of the time. No specialized knowledge required beyond basic Docker familiarity.

Architecture: What Gets Deployed

A typical self-hosted embedded analytics deployment includes:

Your Infrastructure

- Frontend (React) -> Backend (API) -> Config (DB)

- Backend -> Query Engine

- Query Engine -> Your Data Warehouse (Snowflake / BigQuery / Postgres / etc.)

Key point: Query traffic flows directly from the analytics engine to your data warehouse. No intermediate cloud. No data leaving your network boundary.

Stateless Design Enables Simple Operations

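Well-architected platforms separate concerns: the frontend, backend, and query engine containers are stateless, while dashboard definitions, user settings, and encrypted credentials live in the config database.

This means: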

- Scaling: Add container replicas behind a load balancer

- Updates: Rolling deployment with zero downtime

- Recovery: Restore config DB + redeploy containers = back online

Database Connectivity

For embedded analytics, this is where self-hosted provides the most architectural value.

Network Path Comparison

Query traffic takes the shortest path: from the analytics engine to your warehouse over your private network, with no round trip through a vendor's cloud. For financial services platforms processing real-time trading data, fraud detection, or payment analytics, this lower latency and network isolation are often deal-breakers for compliance and performance. Similarly, gaming analytics, IoT dashboards, and operational intelligence platforms benefit from sub-10ms query response times.

Supported Databases

Modern platforms connect to a broad range of sources:

Cloud Warehouses

- Snowflake (including PrivateLink)

- Google BigQuery (VPC Service Controls compatible)

- Amazon Redshift

- Databricks (Unity Catalog)

Relational Databases

- PostgreSQL, MySQL, MariaDB

- Microsoft SQL Server

- Oracle Database

OLAP / Analytics Engines

- ClickHouse

- Apache Druid

- Trino / Presto

- DuckDB

Connection Security

- Direct connection (within VPC)

- SSH tunnel (bastion host)

- VPC peering / PrivateLink

- SSL/TLS with certificate verification
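To make "certificate verification" concrete on the client side, here is a minimal sketch using Python's standard `ssl` module. It builds a context that refuses unverified certificates and mismatched hostnames and sets a protocol floor. The helper name `make_db_tls_context` is hypothetical, and whether a given database driver accepts an `ssl.SSLContext` depends on the driver.

```python
import ssl
from typing import Optional


def make_db_tls_context(ca_file: Optional[str] = None,
                        require_tls13: bool = False) -> ssl.SSLContext:
    """Client-side TLS context for a database connection (hypothetical helper).

    Certificate and hostname verification stay on; the protocol floor is
    TLS 1.2, or TLS 1.3 when require_tls13 is True.
    """
    # create_default_context() sets CERT_REQUIRED and check_hostname=True and
    # loads either the given CA bundle or the system trust store.
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    ctx.minimum_version = (ssl.TLSVersion.TLSv1_3 if require_tls13
                           else ssl.TLSVersion.TLSv1_2)
    return ctx


if __name__ == "__main__":
    ctx = make_db_tls_context()
    print(ctx.verify_mode == ssl.CERT_REQUIRED, ctx.check_hostname)  # True True
```

Drivers that take an `ssl.SSLContext` (asyncpg's `ssl` argument is one example) can use this context directly. libpq-based drivers express the same policy through `sslmode=verify-full` plus `sslrootcert`.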

Multi-Tenancy for Embedded Analytics

If you're embedding analytics into your product, your customers expect data isolation. Here's how modern platforms handle it:

Row-Level Security at Query Time

The most robust approach injects tenant context into every query, for example as a filter on a tenant identifier that is applied to every tenant-scoped table.

This happens at the query layer—before data is retrieved. No application-level filtering that could be bypassed.
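One way to implement query-time tenant filtering is to shadow each tenant-scoped table with a filtered common table expression before the user's query runs. The sketch below uses Python's built-in `sqlite3` so it runs anywhere. The table allowlist, the `tenant_id` column, and the helper names are assumptions for illustration, not any specific product's API.

```python
import sqlite3

# Hypothetical allowlist of tables that carry a tenant_id column.
TENANT_SCOPED_TABLES = ("orders",)


def scope_to_tenant(base_query: str) -> str:
    """Prefix a query with CTEs that shadow each tenant-scoped table.

    An unqualified "orders" in base_query now resolves to the CTE, which only
    holds one tenant's rows; "main.orders" inside the CTE still names the real
    table. Assumes base_query has no WITH clause of its own.
    """
    ctes = ", ".join(
        f"{table} AS (SELECT * FROM main.{table} WHERE tenant_id = :tenant_id)"
        for table in TENANT_SCOPED_TABLES
    )
    return f"WITH {ctes} {base_query}"


def run_for_tenant(conn: sqlite3.Connection, base_query: str, tenant_id: str) -> list:
    # The tenant value is bound as a parameter, never spliced into the SQL text.
    return conn.execute(scope_to_tenant(base_query), {"tenant_id": tenant_id}).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (tenant_id TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)",
                     [("acme", 10.0), ("acme", 5.0), ("globex", 99.0)])
    print(run_for_tenant(conn, "SELECT SUM(amount) FROM orders", "acme"))  # [(15.0,)]
```

Because the filter is applied before aggregation, a `SUM` or `COUNT` written by a dashboard author can never mix tenants. Filtering the aggregated result afterwards would not give that guarantee.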

N-Level Tenancy

Real-world organizational structures are complex. Platforms should support arbitrary nesting, whether the hierarchy models an internal organization or, for SaaS embedding, your customers and their own sub-accounts.

Each level can have:

- Independent data access rules

- Separate branding/theming

- Different permission sets

- Isolated credentials

The depth is configurable, not hardcoded to a specific number of levels.
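A tree of arbitrary depth is enough to model this. The sketch below is a hypothetical data model, not a particular platform's schema. Each level inherits settings such as branding from its ancestors and can override them. It also assumes, as a policy choice, that a tenant may see its own data plus that of every descendant.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass(eq=False)
class Tenant:
    """One node in a tenant tree of any depth (hypothetical data model)."""
    tenant_id: str
    parent: Optional["Tenant"] = field(default=None, repr=False)
    settings: Dict[str, str] = field(default_factory=dict)  # e.g. theme, locale
    children: List["Tenant"] = field(default_factory=list)

    def add_child(self, tenant_id: str, **settings: str) -> "Tenant":
        child = Tenant(tenant_id, parent=self, settings=dict(settings))
        self.children.append(child)
        return child

    def path(self) -> List["Tenant"]:
        """Nodes from the root down to this tenant."""
        chain: List["Tenant"] = []
        node: Optional["Tenant"] = self
        while node is not None:
            chain.append(node)
            node = node.parent
        return chain[::-1]

    def effective_settings(self) -> Dict[str, str]:
        """Inherit settings from every ancestor; deeper levels win."""
        merged: Dict[str, str] = {}
        for node in self.path():
            merged.update(node.settings)
        return merged

    def visible_tenant_ids(self) -> List[str]:
        """This tenant plus all descendants, however deep the tree goes."""
        ids = [self.tenant_id]
        for child in self.children:
            ids.extend(child.visible_tenant_ids())
        return ids


if __name__ == "__main__":
    platform = Tenant("platform", settings={"theme": "default"})
    acme = platform.add_child("acme", theme="acme-blue")
    acme_eu = acme.add_child("acme-eu", locale="de-DE")
    print(acme_eu.effective_settings())  # {'theme': 'acme-blue', 'locale': 'de-DE'}
    print(acme.visible_tenant_ids())     # ['acme', 'acme-eu']
```

The list from `visible_tenant_ids()` is what a query-layer filter (for example `tenant_id IN (...)`) would consume. Nothing in the model assumes a fixed number of levels.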

Token-Based Embedding

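For seamless integration, analytics embeds via signed tokens. Your backend issues a token identifying the user and tenant, and the embedded component presents it with each request.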

Stateless. No cookies. No session management. The token carries all context needed to scope data access.
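A minimal sketch of how such a token can be issued and checked, using only Python's standard library. It produces a JWT-like `payload.signature` string signed with HMAC-SHA256. It is not a full JWT implementation (there is no header), and a maintained JWT library is the more likely production choice. The claim names, the five-minute default TTL, and the shared secret between your backend and the analytics backend are all assumptions.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Any, Dict, Optional


def _b64url(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign_embed_token(claims: Dict[str, Any], secret: bytes, ttl_seconds: int = 300,
                     now: Optional[float] = None) -> str:
    """Issue a payload.signature token carrying the scoping claims."""
    issued = int(now if now is not None else time.time())
    payload = dict(claims, iat=issued, exp=issued + ttl_seconds)
    body = _b64url(json.dumps(payload, separators=(",", ":"), sort_keys=True).encode())
    sig = hmac.new(secret, body.encode("ascii"), hashlib.sha256).digest()
    return f"{body}.{_b64url(sig)}"


def verify_embed_token(token: str, secret: bytes, now: Optional[float] = None) -> Dict[str, Any]:
    """Return the claims if the signature is valid and the token has not expired."""
    body, _, sig = token.partition(".")
    expected = hmac.new(secret, body.encode("ascii"), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, _b64url_decode(sig)):  # constant-time compare
        raise ValueError("bad signature")
    claims = json.loads(_b64url_decode(body))
    if (now if now is not None else time.time()) >= claims["exp"]:
        raise ValueError("token expired")
    return claims


if __name__ == "__main__":
    secret = b"replace-with-a-real-secret"
    token = sign_embed_token({"tenant_id": "acme", "user": "u-123"}, secret)
    print(verify_embed_token(token, secret)["tenant_id"])  # acme
```

Since every request carries its own verifiable claims, the analytics backend needs no session store. This is the property the paragraph above describes.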

Security Architecture

Self-hosted analytics runs inside your security perimeter, but architecture still matters:

Network Isolation

Standard Deployment

- Deploy in private subnets (no public internet access)

- Require VPN or bastion for admin access

- Database connections via private endpoints or VPC peering

- Load balancer terminates SSL/TLS

Air-Gapped Deployment

For defense contractors, government agencies, critical infrastructure, and highly regulated enterprises, air-gapped deployment provides maximum isolation:

- Complete network isolation: No outbound internet connectivity

- Offline installation: All container images, dependencies, and packages delivered via secure media

- No vendor phone-home: Zero telemetry, no license checks requiring internet access

- Offline updates: Security patches and feature updates delivered via encrypted archives

- Disconnected documentation: Complete docs packaged for offline browsing

Air-gapped use cases:

- Defense and intelligence analytics

- Nuclear facility operational dashboards

- Critical infrastructure (power grid, water systems)

- Financial trading floor analytics (market data isolation)

- Healthcare research (HIPAA + institutional review board requirements)

- Government contractor analytics (FedRAMP High, ITAR)

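The deployment process for air-gapped environments follows the same container-based pattern, except that images and packages are loaded from the secure media described above rather than pulled from a public registry.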
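Before loading anything from offline media, it is worth checking that each file arrived intact. The sketch below assumes a hypothetical `manifest.json` in the bundle that maps file names to SHA-256 digests. A manifest only protects you if the manifest itself is trusted, for example because it is signed or delivered over a separate channel.

```python
import hashlib
import json
from pathlib import Path
from typing import Dict, List


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large image archives don't need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_bundle(bundle_dir: Path, manifest_name: str = "manifest.json") -> List[str]:
    """Compare every file listed in the manifest against its recorded SHA-256.

    Returns a list of problems; an empty list means the bundle checks out.
    """
    manifest: Dict[str, str] = json.loads((bundle_dir / manifest_name).read_text())
    problems: List[str] = []
    for name, expected in sorted(manifest.items()):
        path = bundle_dir / name
        if not path.is_file():
            problems.append(f"missing: {name}")
        elif sha256_of(path) != expected.lower():
            problems.append(f"checksum mismatch: {name}")
    return problems


if __name__ == "__main__":
    import sys
    issues = verify_bundle(Path(sys.argv[1] if len(sys.argv) > 1 else "."))
    print("bundle OK" if not issues else "\n".join(issues))
```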

Authentication

- SSO via SAML/OIDC: Okta, Azure AD, Ping Identity, OneLogin, Auth0

- Service accounts: Least-privilege IAM roles for programmatic access

- Encrypted credentials: All database credentials encrypted in config database

- MFA support: Two-factor authentication for admin access

Encryption

- TLS 1.2+ for all internal communication (configurable to require TLS 1.3)

- At-rest encryption for config database (AES-256)

- Customer-managed encryption keys (CMEK): Integration with AWS KMS, Azure Key Vault, GCP Cloud KMS

- Encrypted backups: All backup archives encrypted before storage

Audit Logging

- Query logs: Who ran what query, when, and against which data sources

- Authentication events: Login attempts, SSO flows, session creation/termination

- Configuration changes: Dashboard modifications, user permission changes, data source updates

- SIEM integration: Export logs to Splunk, Datadog, Elastic, or custom log aggregators
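Audit events are easiest to ship to a SIEM when each one is a single line of JSON. Here is a minimal sketch using Python's standard `logging` and `json` modules. The logger name, event types, and field names are hypothetical, chosen only to mirror the categories listed above.

```python
import json
import logging
from datetime import datetime, timezone
from typing import Any, Dict, Optional

audit_logger = logging.getLogger("analytics.audit")  # hypothetical logger name


def audit_event(event_type: str, actor: str, tenant_id: str,
                details: Optional[Dict[str, Any]] = None) -> str:
    """Emit one audit record as a single JSON line and return that line."""
    record = {
        "ts": datetime.now(timezone.utc).isoformat(),  # UTC timestamp
        "event_type": event_type,  # e.g. "query.executed", "auth.login_failed"
        "actor": actor,
        "tenant_id": tenant_id,
        "details": details or {},
    }
    line = json.dumps(record, sort_keys=True)
    audit_logger.info(line)
    return line


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO, format="%(message)s")
    audit_event("query.executed", "user-42", "acme",
                {"data_source": "warehouse", "duration_ms": 118})
```

Any log shipper that tails stdout or a file can forward these lines unchanged, so you can keep your existing aggregation pipeline.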

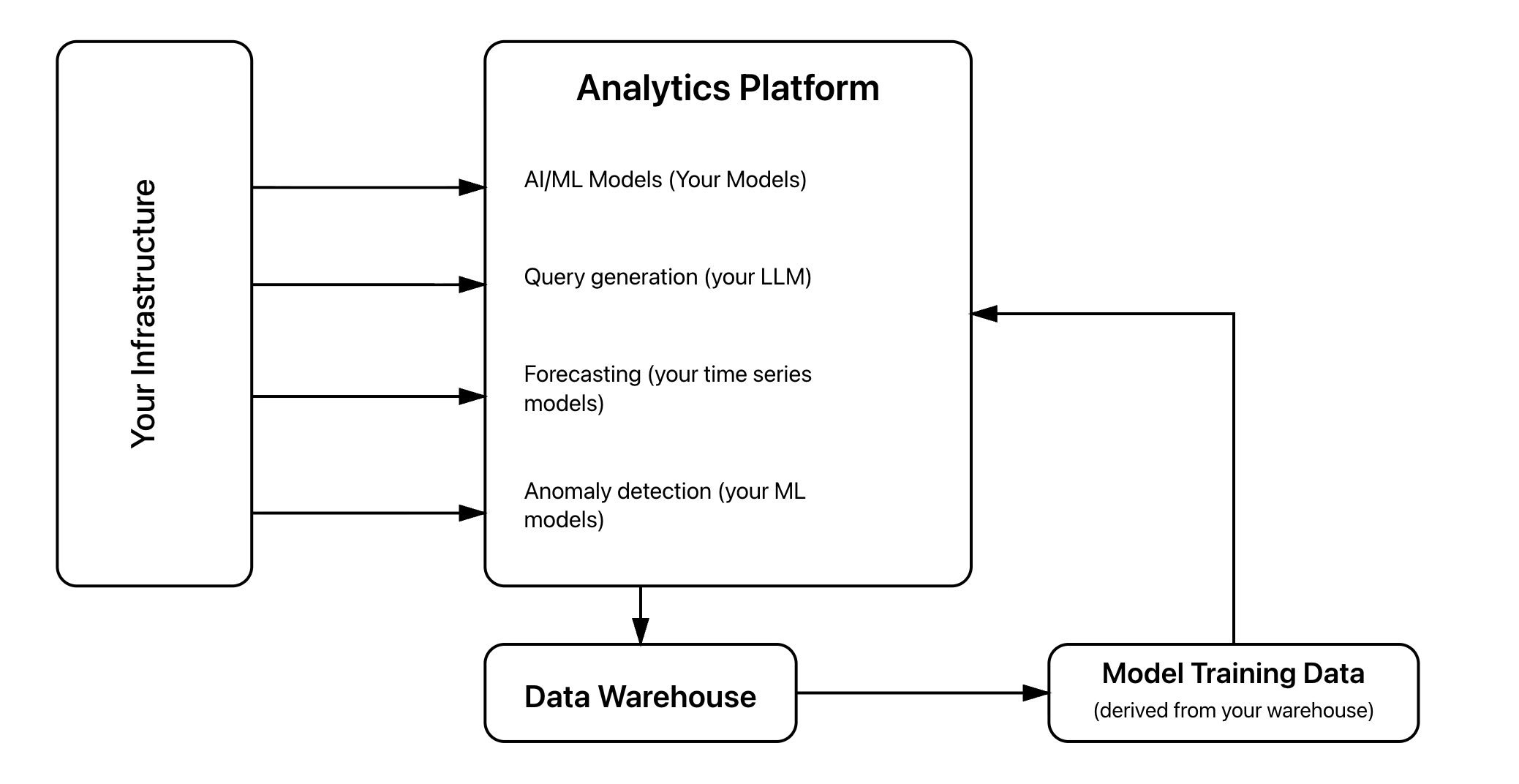

AI and ML for Self-Hosted Analytics

As embedded analytics evolves, AI capabilities are becoming table stakes. Self-hosted deployment gives you control over how AI models access and process your data.

Bring Your Own Model (BYOM)

Self-hosted analytics supports Bring Your Own Model architecture, where you maintain complete control over your AI infrastructure:

- Your models, your data: You bring your own fine-tuned models, and DataBrain never trains on your data

- Zero external API calls: All AI inference happens within your infrastructure

- Complete data governance: No data leaves your environment for model training or evaluation

- Model versioning: Your MLOps pipeline controls model deployments and rollbacks

- Compliance-aligned: Perfect for regulated industries where model transparency and data residency are non-negotiable

BYOM use cases:

- Financial institutions with proprietary risk models

- Healthcare systems with custom diagnostic analytics

- Enterprises with sensitive business logic in ML models

- Organizations subject to strict data governance requirements

Natural Language Querying

Modern analytics platforms can include AI-powered query generation that runs entirely within your infrastructure.

Self-hosted advantages:

- Your data never leaves your infrastructure to power external LLMs

- Queries execute with your row-level security rules automatically applied

- No prompts or query results shared with cloud-hosted AI services (generated queries should still be validated before they run)

- Fine-tuned models optimize for your domain-specific terminology
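Self-hosting the model does not make its output trustworthy, so generated SQL is still best treated as untrusted input. The sketch below uses `sqlite3`'s real `set_authorizer` hook to refuse any statement that is not a plain read at prepare time. The action allowlist and helper names are assumptions, and other databases would need their own mechanism, typically a read-only database role.

```python
import sqlite3

# Authorizer actions a generated query may trigger: selecting, reading
# columns, and calling functions. Everything else (writes, DDL, PRAGMA,
# ATTACH, transactions) is denied.
_READ_ONLY_ACTIONS = {sqlite3.SQLITE_SELECT, sqlite3.SQLITE_READ, sqlite3.SQLITE_FUNCTION}


def _read_only(action, arg1, arg2, db_name, trigger):
    return sqlite3.SQLITE_OK if action in _READ_ONLY_ACTIONS else sqlite3.SQLITE_DENY


def run_generated_sql(conn: sqlite3.Connection, generated_sql: str, params=()) -> list:
    """Execute model-generated SQL only if SQLite can prepare it as read-only."""
    conn.set_authorizer(_read_only)
    try:
        return conn.execute(generated_sql, params).fetchall()
    finally:
        # Restore a permissive authorizer for the application's own statements.
        conn.set_authorizer(lambda *args: sqlite3.SQLITE_OK)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (tenant_id TEXT, amount REAL)")
    print(run_generated_sql(conn, "SELECT COUNT(*) FROM orders"))  # [(0,)]
    try:
        run_generated_sql(conn, "DROP TABLE orders")
    except sqlite3.DatabaseError as exc:
        print("blocked:", exc)
```

Denied statements fail before execution, so a manipulated prompt cannot turn a question into a write. Row-level scoping still has to be applied separately.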

Predictive Analytics and Forecasting

Self-hosted platforms can run ML models for:

- Time series forecasting: Revenue projections, demand prediction, capacity planning

- Anomaly detection: Unusual patterns in transactions, user behavior, system metrics

- Cohort analysis: Customer lifetime value prediction, churn risk scoring

- What-if scenarios: Simulate business outcomes based on parameter changes
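As a simple illustration of the anomaly-detection item, a rolling z-score flags points that sit far from the recent mean. This is one of the simplest detectors, swapped in here for clarity. Production models usually also account for seasonality and trend, and the window and threshold below are placeholder defaults.

```python
from statistics import mean, stdev
from typing import List, Sequence


def zscore_anomalies(values: Sequence[float], window: int = 7,
                     threshold: float = 3.0) -> List[int]:
    """Return indexes whose value lies more than `threshold` standard
    deviations from the mean of the preceding `window` points."""
    flagged: List[int] = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            is_anomaly = values[i] != mu  # flat history: any change stands out
        else:
            is_anomaly = abs(values[i] - mu) / sigma > threshold
        if is_anomaly:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    daily_orders = [100, 102, 98, 101, 99, 100, 103, 250, 101]
    print(zscore_anomalies(daily_orders))  # [7]
```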

Data governance for AI:

When AI models run in your infrastructure:

Your Infrastructure

- Analytics Platform

- AI/ML Models (Your Models)

  - Query generation (your LLM)

  - Forecasting (your time series models)

  - Anomaly detection (your ML models)

- Model Training Data (derived from your warehouse)

- Data Warehouse

Control points:

- Models trained exclusively on your data

- No data egress to third-party AI services

- Model versioning and governance in your MLOps pipeline

- Explainability tools running locally for compliance

Compliance advantages:

For regulated industries (financial services, healthcare, government), self-hosted AI analytics addresses:

- EU AI Act requirements: High-risk AI systems must maintain detailed logs, explainability, and human oversight—easier when you control the infrastructure

- Data minimization: AI models trained only on data necessary for analytics, not shipped to external services

- Right to explanation: Users can see which data influenced AI-generated insights

- Bias monitoring: Your data science team can audit model behavior directly

Automated Insights

Self-hosted analytics with AI can automatically:

- Detect significant changes in metrics and alert users

- Recommend relevant dashboards based on user behavior

- Suggest data relationships and correlations

- Generate natural language summaries of dashboard data

All of this happens within your security boundary without exposing sensitive business intelligence to external AI providers.

Deployment Options

Different organizations have different requirements. Modern platforms offer flexibility:

Fully Self-Hosted (On-Premise or Private Cloud)

You run everything. All components in your infrastructure.

Your VPC / Data Center

- Analytics frontend (container)

- Analytics backend (container)

- Query engine (container)

- AI/ML models (optional, bring your own)

- Config database (managed or container)

- Your data warehouse

Characteristics:

- Complete network isolation

- No external dependencies at runtime

- You control update timing

- Air-gapped deployment supported

- Data residency guaranteed

Good for:

- Highly regulated industries (financial services, healthcare, government)

- Defense contractors and critical infrastructure

- Organizations with strict data sovereignty requirements

- Enterprises with existing on-premise infrastructure investments

VPC Peering

- Reduced operational burden

- Data queries execute over private connection

- Vendor handles updates and monitoring

- No data leaves your VPC

Good for:

- Teams wanting managed operations while keeping data internal

- Cloud-native SaaS companies on AWS/Azure/GCP

- Organizations with modern DevOps but limited spare capacity

Hybrid

Different models for different customers.

- Self-hosted instances for enterprise customers

- Cloud for smaller customers

- Same platform, same features, different deployment

Good for:

- Platforms serving diverse customer segments

- Companies with both SMB and enterprise clients

- Organizations transitioning from cloud to self-hosted

Operations

Updates

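Modern platforms make updates routine: pull the new image version and redeploy.

For Kubernetes: a rolling update replaces containers a few at a time, so the remaining replicas keep serving traffic and users see no downtime.

For air-gapped environments: security patches and feature updates arrive as encrypted archives on secure media, are imported into your environment, and are then rolled out the same way.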
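The idea behind a zero-downtime rolling update can be sketched as a small simulation. It upgrades one replica at a time, checks health, and halts at the first failure so the other replicas keep serving. This is a simplified in-place model for illustration, not how Kubernetes implements Deployments. Kubernetes also surges new pods before removing old ones, governed by `maxSurge` and `maxUnavailable`. The `Replica` type and `is_healthy` callback are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Replica:
    name: str
    version: str


def rolling_update(replicas: List[Replica], new_version: str,
                   is_healthy: Callable[[Replica], bool]) -> bool:
    """Upgrade replicas one at a time so the rest keep serving traffic.

    At the first failed health check, the failing replica is reverted and
    the rollout stops; replicas upgraded earlier stay on the new version.
    """
    for replica in replicas:
        previous = replica.version
        replica.version = new_version  # stand-in for "replace this container"
        if not is_healthy(replica):
            replica.version = previous  # revert this replica and halt the rollout
            return False
    return True


if __name__ == "__main__":
    pool = [Replica(f"api-{i}", "1.0") for i in range(1, 4)]
    print(rolling_update(pool, "1.1", lambda r: True), [r.version for r in pool])
    # True ['1.1', '1.1', '1.1']
```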

Monitoring

Integrate with your existing observability:

- Metrics: Prometheus, Datadog, CloudWatch, New Relic

  - Request latency, query duration, error rates

  - Cache hit ratios, database connection pool usage

  - Container resource utilization (CPU, memory, disk I/O)

- Logs: Ship to your log aggregator

  - Query logs, auth events, errors

  - Structured JSON logs for easy parsing

- Alerts: Standard thresholds

  - Health check failures, elevated error rates

  - Query performance degradation

  - Authentication failures (potential security incidents)
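An "elevated error rate" alert boils down to comparing a rate over a sliding window against a threshold. In practice this rule would live in Prometheus alerting rules or your monitoring vendor's configuration. The class below only illustrates the logic, and its window size and threshold are placeholders.

```python
from collections import deque


class ErrorRateAlert:
    """Fire when the error rate over the last `window` requests exceeds
    `threshold` (a fraction). Stays quiet until the window is full."""

    def __init__(self, window: int = 100, threshold: float = 0.05):
        self.window = window
        self.threshold = threshold
        self.outcomes = deque(maxlen=window)  # True means the request failed

    def record(self, failed: bool) -> bool:
        """Add one request outcome and report whether the alert is firing."""
        self.outcomes.append(failed)
        if len(self.outcomes) < self.window:
            return False
        return sum(self.outcomes) / self.window > self.threshold


if __name__ == "__main__":
    alert = ErrorRateAlert(window=10, threshold=0.2)
    for failed in [False] * 7 + [True] * 3:
        firing = alert.record(failed)
    print(firing)  # True: 3 failures in the last 10 requests exceeds 20%
```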

Backup and Disaster Recovery

What matters:

- Config database: Dashboard definitions, user settings, encrypted credentials

- User-uploaded content: Custom logos, themes, embedded resources

What doesn't need backup:

- Container images (pulled from registry or rebuilt)

- Query cache (ephemeral, rebuilt on startup)

- Temporary files and session data

Backup strategy:

- Automated daily snapshots of config database

- Encrypted backups to S3, Azure Blob Storage, or Google Cloud Storage

- 30-day retention with 7-day point-in-time recovery

- Cross-region replication for disaster recovery
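Storage lifecycle rules (such as S3 lifecycle configuration) usually enforce retention for you. The pruning decision itself is simple enough to sketch, though. The 30-day default mirrors the strategy above. The safeguard that never expires the only remaining snapshot is an added assumption, not part of the stated policy.

```python
from datetime import datetime, timedelta
from typing import List, Tuple


def split_by_retention(snapshot_times: List[datetime], now: datetime,
                       retention_days: int = 30) -> Tuple[List[datetime], List[datetime]]:
    """Partition snapshot timestamps into (keep, expire), both sorted oldest first."""
    cutoff = now - timedelta(days=retention_days)
    keep = sorted(t for t in snapshot_times if t >= cutoff)
    expire = sorted(t for t in snapshot_times if t < cutoff)
    if not keep and expire:
        keep = [expire.pop()]  # never expire the newest remaining snapshot
    return keep, expire


if __name__ == "__main__":
    now = datetime(2026, 3, 31)
    daily = [now - timedelta(days=d) for d in range(40)]
    keep, expire = split_by_retention(daily, now)
    print(len(keep), len(expire))  # 31 9
```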

Recovery process:

1. Restore config database from latest snapshot

2. Deploy containers (docker-compose up or kubectl apply)

3. Verify health checks and connectivity to data sources

4. Validate dashboards and user access

Recovery Time Objective (RTO): about 15 minutes for standard deployments

Recovery Point Objective (RPO): Last successful backup (typically less than 24 hours)

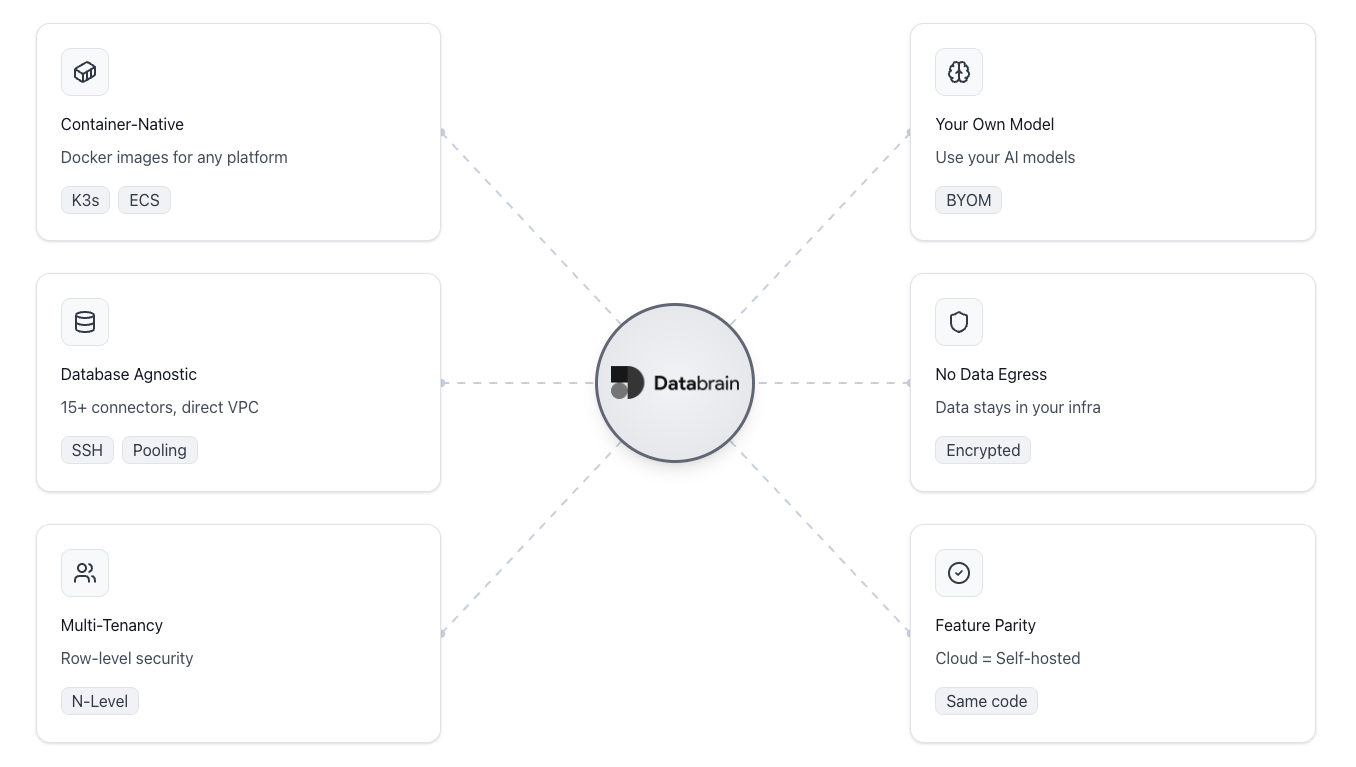

DataBrain's Approach

DataBrain is built for self-hosted deployment without compromising features:

Container-Native: Ships as Docker images. Works with docker-compose, Kubernetes, ECS, or any container platform.

Database Agnostic: 15+ connectors. Direct VPC connectivity. SSH tunneling. Connection pooling built-in.

N-Level Multi-Tenancy: Row-level security at the query layer. Arbitrary organizational hierarchies. Token-based embedding.

Bring Your Own Model (BYOM): AI features that use your models, not ours. No data training. Complete control over ML infrastructure.

No Data Egress: We store dashboard definitions and encrypted credentials. Your business data never leaves your infrastructure.

Feature Parity: Everything available in cloud is available self-hosted. Same codebase, your infrastructure.

Air-Gap Ready: Complete offline deployment support for defense, government, and critical infrastructure use cases.

Getting Started

1. Evaluate your requirements

- Where does your data live?

- What databases do you need to connect?

- Do you need air-gapped deployment?

- What's your team's container experience?

2. Try a deployment

- Spin up with docker-compose locally

- Connect a test database

- Build a dashboard with AI-powered queries

3. Plan production

- Single-node vs. Kubernetes deployment

- Monitoring and observability integration

- Backup and disaster recovery strategy

- SSO and authentication requirements

Talk to us for access to self-hosted DataBrain


For Specific Industries

Financial Services

Self-hosted analytics solves specific compliance challenges around data residency, third-party risk, and audit requirements. Learn how banks, fintechs, and insurance companies deploy analytics without vendor friction.

See our Financial Services Solutions

Healthcare

HIPAA-compliant analytics deployment with patient data remaining in your infrastructure. Support for on-premise EHR systems and clinical research environments.

Healthcare Analytics Solutions

Frequently Asked Questions

What is self-hosted embedded analytics?

Self-hosted embedded analytics refers to analytics platforms that run entirely within your own infrastructure—whether in your AWS, Azure, or GCP VPC, private cloud, or on-premise data center. Unlike cloud-hosted analytics that process data in vendor-controlled environments, self-hosted solutions give you complete control over where analytics workloads run, where data is processed and stored, and who can access it. For companies embedding analytics in their products, this means analytics data never leaves your security perimeter, simplifying compliance and reducing third-party risk.

How does self-hosted analytics help with data residency compliance?

Self-hosted analytics eliminates cross-border data transfers. When dashboards and queries execute entirely within your chosen region or data center, you can meet data residency requirements for GDPR (EU), data localization laws (Russia, China, India), and industry-specific regulations (financial services, healthcare). You can provide auditors with network diagrams showing that data never leaves your jurisdiction, and you avoid complex Data Processing Agreements with analytics vendors.

What's the difference between SOC 2 Type I and Type II for analytics?

SOC 2 Type I assesses whether security controls are designed appropriately at a point in time. SOC 2 Type II evaluates whether those controls operate effectively over a period (typically 6-12 months). For analytics vendors, Type II is the minimum expectation. Self-hosted analytics lets you rely on your own SOC 2 Type II controls and evidence for the analytics stack, rather than depending on a separate vendor's certification—simplifying audit processes and third-party risk assessments.

Can self-hosted analytics integrate with Snowflake, Databricks, and other modern warehouses?

Yes. Modern self-hosted analytics platforms connect directly to cloud data warehouses and databases including Snowflake, Databricks, BigQuery, Redshift, PostgreSQL, MySQL, SQL Server, and modern OLAP systems like ClickHouse and Trino. Connectivity is typically secured via VPC peering, PrivateLink, SSH tunnels, or private endpoints. Data stays in your warehouse; the self-hosted analytics engine runs queries in place and renders dashboards inside your application.

What are the infrastructure requirements for self-hosted analytics?

Most self-hosted analytics platforms run as containerized applications. A common starting point for a production deployment serving hundreds of concurrent users might be a small Kubernetes cluster or VM setup with 4-8 vCPUs, 16-32 GB RAM, and 100-500 GB of storage for metadata and caching. This can be scaled horizontally or vertically as usage grows. Because analytics runs alongside your existing application and data infrastructure, you can reuse your existing observability, logging, and backup tooling. For air-gapped environments, all dependencies and images are provided offline.

What is Bring Your Own Model (BYOM) and how does it work?

Bring Your Own Model (BYOM) is an AI architecture where you maintain complete control over your models and data. Instead of DataBrain training models on your data, you bring your own fine-tuned models and integrate them with the analytics platform. This approach ensures zero data egress, complete data governance, and compliance with regulations that require model transparency. Your models run entirely within your infrastructure—perfect for financial services, healthcare, and other regulated industries.

What's the difference between self-hosted analytics and open-source analytics?

Self-hosted analytics refers to the deployment model (running in your infrastructure), while open-source refers to the licensing model (code is publicly available). You can have commercial self-hosted analytics (like DataBrain) or open-source self-hosted analytics (like Metabase or Superset). Commercial self-hosted typically includes enterprise features like advanced SSO, row-level security, white-label embedding, commercial support, guaranteed security patches, and AI-powered capabilities, while open-source requires more DIY integration and maintenance.

DataBrain is an embedded analytics platform that deploys where you need it. Learn more at usedatabrain.com
