Production Text-to-SQL Systems: Why Architecture Matters More Than Model

.png)

If you’re evaluating LLMs for analytics, you’re usually asking two questions at the same time:

- Should we use RAG (Retrieval-Augmented Generation) or fine-tuning for SQL generation?

- How do we ensure query accuracy improves over time instead of degrading?

The most important truth is also the least marketed: production text-to-SQL is an architecture problem, not a model-selection problem. On real enterprise schemas with business logic and ambiguous metric definitions, “one-shot prompt → SQL” is brittle. It can look great in a demo and quietly produce wrong dashboards in production.

The framing that causes teams to fail is: “RAG vs fine-tuning”

The framing that works is: “Which failure modes are we trying to solve, and what system design prevents them?”

In production analytics, RAG, semantic layers, fine-tuning, and feedback loops aren’t competing ideas. They’re different tools for different failure modes:

- RAG grounds the model in the current schema and definitions.

- Semantic layers reduce ambiguity and enforce governed metric logic.

- Fine-tuning improves consistency and pattern learning when patterns are stable.

- Feedback loops turn corrections and runtime signals into continuous improvement.

If you want a system that gets more accurate with usage, the winning pattern is:

Semantic layer + schema-aware retrieval (RAG) + evaluation + feedback loops

Optional: light fine-tuning for stable, repetitive query families.

Why SQL generation is harder than “text”

LLMs are excellent at language. Analytics is not a language problem. Text-to-SQL sits at the intersection of:

- Business definitions (what “revenue” means here)

- Schema correctness (tables/columns, types, constraints)

- Join logic (relationship paths, grain alignment)

- Time semantics (cohorts, SCD, “last month” definitions)

- Governance (RBAC, row-level security, multi-tenant constraints)

A query that runs and returns results can still be semantically wrong. In BI, “confident but wrong” is worse than “can’t answer”. That’s why generic advice like few-shot prompting breaks down: you’re not optimizing for fluency. You’re optimizing for:

- Execution correctness (does it run?)

- Semantic correctness (does it answer the intended question?)

- Consistency (does it behave reliably over time?)

Those goals require architecture.

The core decision framework (what to build first, and why)

Start with RAG when

RAG is your fastest path to credible production results when:

- Schemas evolve (new tables, new columns, frequent changes)

- Metrics are governed and change (definitions evolve)

- You need a working system in weeks, not months

- You can accept good-but-not-perfect v1 accuracy

- You want changes in business logic to take effect without retraining

Add a semantic layer early when

This is where analytics teams usually win or lose:

- You have 50+ tables, or multiple data domains

- Terms are ambiguous: revenue, churn, MRR, active users, “conversion”

- Multi-tenant usage means accuracy per tenant matters

- You need permission-aware generation

- You care about consistent definitions across dashboards, not just SQL that runs

Justify fine-tuning later when

Fine-tuning is powerful, but only under the right conditions:

- Query patterns are stable for months

- You have hundreds to thousands of validated examples

- Your RAG+ semantic layer foundation is already working

- You need consistent output style and fewer recurring reasoning errors

- You have ML/ops capacity to manage training + evaluation + deployment

Invest in feedback loops once usage is real

Feedback loops are what turn “demo” into “product”:

- You’re past pilot: tens of queries per week minimum

- You can build an evaluation harness (golden set + scoring)

- You can log context snapshots alongside outputs

- You want accuracy to improve with usage, not drift

What RAG means for analytics

Most RAG definitions say “retrieve documents and inject them into the prompt”. For analytics, that’s incomplete.

RAG for text-to-SQL is grounding the model in trusted, schema-aware context.

Your retrieved “documents” are not PDFs, they are structured metadata and governed definitions:

- Curated table/column descriptions (not raw information_schema dumps)

- Join graphs and relationship constraints

- Semantic model definitions: metrics, dimensions, grains

- Temporal rules: canonical date fields, timezone conventions, cohort windows

- Filter constraints: allowed filters, “safe” defaults, permission rules

The goal is to reduce guessing. Without retrieval, the model invents. With schema-aware retrieval, the model references documented truth.

What not to do with RAG for SQL

- Don’t dump entire schemas into context

- Don’t rely on embeddings over raw table names only

- Don’t omit join metadata and grain

- Don’t ignore temporal semantics

- Don’t skip permissions and governance constraints

What to do instead

- Retrieve a curated subset of relevant tables/metrics (often 10–50 items)

- Include explicit join paths and grain alignment notes

- Provide canonical metric definitions and allowed dimensions

- Add permission constraints (tenant filters, RBAC, sensitive columns)

- Provide temporal “rules of the road” (what “last month” means here)

Why RAG is powerful for text-to-SQL

1) Schema awareness without retraining

RAG lets the model “see” what exists now:

- Tables, Columns

- Relationship Paths

- Constraints and Naming Conventions

When schemas change, you update metadata and retrieval indexes, not model weights.

2) Metric governance and consistency

Most wrong queries aren’t SQL syntax failures, they’re definition failures.

If “Revenue” has a canonical definition, RAG + semantic layer can enforce it:

- Revenue = completed orders only

- MRR excludes trials and adjusts for mid-month plan changes

- Active user has a specific activity event definition

This prevents silent re-interpretation.

3) Lower cost and faster iteration

- Update a metric definition: minutes

- Update retrieval indexing: minutes to hours

- Retrain a tuned model: hours plus validation and rollout risk

For fast-moving teams, RAG is operationally aligned with how analytics evolves.

Where RAG breaks down (and why people overpromise)

RAG grounds the model. It does not automatically teach better reasoning.

RAG struggles when:

- Complex multi-step reasoning is required (nested windows, advanced cohort logic)

- The same reasoning mistake repeats despite correct context

- You need strict formatting / output structure

- You need performance-aware query construction (e.g., using materializations)

The core limitation: RAG gives the model what to reference, not how to think.

That’s where fine-tuning helps.

What fine-tuning actually does for SQL

Fine-tuning changes model behavior by teaching patterns:

- How your organization writes SQL (style, idioms, preferred join strategies)

- Common table selection patterns for business questions

- Stable metric and cohort logic patterns

- Consistent formatting and structure

- Reduced repeated failure modes for known query families

Fine-tuning works best when the domain is stable and your examples are high quality.

When fine-tuning works well

- Stable schemas and metric logic exist

- High-quality labels (validated SQL, not guessed)

- Repetitive query classes (e.g., the same analysis across regions/segments)

- Clear success criteria and evaluation harness

The real downsides (the operational truth)

Fine-tuning is:

- expensive (data curation, training, validation, hosting)

- slower to iterate than prompts/metadata

- brittle to schema changes (weights learn old patterns)

- a drift risk if not monitored

Once you fine-tune, schema/definition changes become release events unless you keep RAG + semantic grounding strong.

Practical principle:

Treat fine-tuning as an accelerator for stable patterns, not a replacement for grounding and governance.

Semantic layer: the missing “truth layer” in most LLM BI

A semantic layer is the system’s canonical definition of:

- metrics

- dimensions

- grains

- join paths

- allowed filters

- permissions and tenant rules

- time semantics

In production text-to-SQL, the semantic layer reduces ambiguity and increases correctness more reliably than any prompt trick.

Why it matters

When a user asks:

“What was churn last month by plan?”

The model must know:

- churn definition (logo vs revenue churn, cohort rules)

- the correct time window (calendar vs trailing)

- where plan lives (subscription table? product dimension?)

- grain alignment and allowed joins

- permission constraints

If those definitions aren’t explicit, the model guesses. Your “accuracy” becomes a marketing number, not a reliable system.

What “semantic layer + RAG” looks like in practice

- intent parsed

- retrieval pulls relevant metrics + dimensions + join paths

- SQL planned using governed definitions

- SQL generated, then validated

This is why most serious systems converge toward semantic grounding.

Databrain’s Semantic Layer:

Feedback loops

RAG and fine-tuning influence how the model generates. Feedback loops define how it improves over time.

Feedback signals in analytics include:

- analyst correction to SQL

- rejected result (“this doesn’t match expectations”)

- filter adjustments

- join removed or replaced

- “metric override” events (definition gap)

- runtime errors and execution feedback

If you don’t capture and use these signals, the system repeats mistakes indefinitely.

A pragmatic feedback loop architecture

Log the entire interaction as a context snapshot:

- question

- retrieved context (tables/metrics/join paths)

- generated SQL

- execution outcome (success/error)

- corrected SQL (if any)

- semantic outcome (accepted/rejected)

- metadata (timestamp, tenant, permissions, risk tier)

Then route the signals into three improvement channels:

Immediate (weekly): prompt + retrieval tuning

- common failure patterns

- missing join paths or ambiguous definitions

- retrieval over/under-inclusion

Medium-term (monthly): retrieval ranking optimization

- reweight indices based on what context was actually needed

- improve schema linking and synonym dictionaries

- adjust chunking strategy for definitions

Long-term (quarterly+): fine-tuning dataset curation

- only high-confidence, validated examples

- focus on stable query families

- treat tuning as optional and measured

Execution-guided feedback

One of the most practical signals in SQL generation is objective:

- Did the query run?

- Did it error?

- Did it return empty results?

- Did it violate constraints?

Execution feedback enables iterative correction loops:

- generate → execute → capture error → regenerate with error context

This doesn’t solve semantic correctness by itself, but it dramatically improves robustness and reduces failure modes like invalid columns or wrong function usage.

The modern production architecture

The “one-shot SQL” era is fading. The modern pattern is multi-step:

- intent parsing (what is being asked?)

- schema linking + retrieval (what is relevant?)

- planning (joins, grains, metrics)

- SQL generation

- static checks (permissions, risky patterns)

- execution (safe, read-only)

- correction loops (if error)

- semantic validation gates (accept/reject)

- feedback logging

This is the architecture that aligns with enterprise BI requirements: correctness, governance, and continuous improvement.

A practical comparison table

Key insight: Most teams should prioritize semantic layer + RAG + evaluation + feedback, then use fine-tuning surgically.

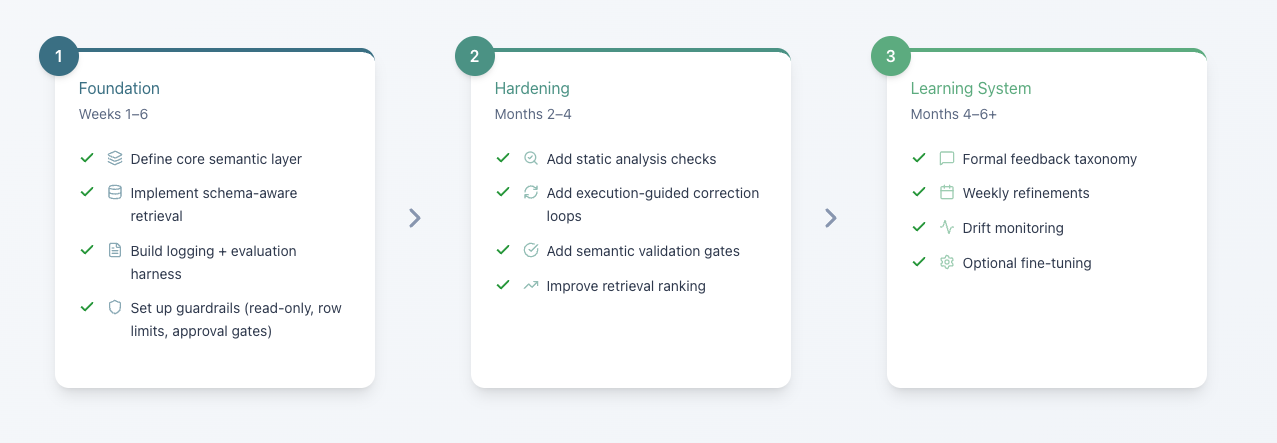

A 3-stage implementation roadmap

Stage 1: Foundation (weeks 1–6)

Goal: get to a credible Minimal Viable Product

- Define core semantic layer (top metrics/dimensions)

- Implement schema-aware retrieval (start simple, then iterate)

- Build logging + evaluation harness (golden set of queries)

- Put guardrails in place (read-only, row limits, approval gates)

Stage 2: Hardening (months 2–4)

Goal: reduce failures and prevent damage

- Add static analysis checks (invalid joins, risky patterns)

- Add execution-guided correction loops

- Add semantic validation gates for high-risk queries

- Improve retrieval ranking and synonym mapping

Stage 3: Learning system (months 4–6+)

Goal: improve with usage

- formal feedback taxonomy (error, correction, rejection)

- weekly review and prompt/retrieval refinements

- drift monitoring (accuracy trends, failure clusters)

- optional: fine-tuning for stable query families

The text-to-SQL maturity model (what “good” looks like)

Stage 1: Prompt-only prototype

- manual schema descriptions

- full human review

- low reliability

Stage 2: Semantic layer + RAG

- grounded generation

- selective review gates

- credible production MVP

Stage 3: Feedback loops + evaluation

- continuous improvement

- monitoring and drift detection

- robust production system

Stage 4: Hybrid with light fine-tuning

- targeted fine-tuning for stable patterns

- strong orchestration and evaluation

- mission-critical correctness at scale

Most real teams operate at Stage 2–3. That’s normal and sufficient for many production use cases.

Key takeaways

- Start with semantic layer + RAG. It’s the fastest route to correctness and maintainability.

- Fine-tuning is optional and conditional. Use it only when patterns are stable and you have validated examples.

- Feedback loops are non-negotiable for production. Logging alone isn’t enough, use signals to improve retrieval and definitions.

- Execution feedback is the most practical objective signal. It makes the system robust even before full semantic validation is mature.

- Measure what matters. Track semantic correctness, not just “query ran”.

- Architecture beats model choice. Strong governance with a solid baseline model beats a powerful model with weak grounding.