The Ultimate Guide to Data Modeling: Concepts, Techniques, and Best Practices

.png)

Rushing into tables without a plan is how many data projects wobble. You can ship something fast, but it won’t scale or answer tough questions when the pressure is on.

Data modeling is the plan. It turns scattered, messy inputs into information people can trust.

In the pages below, you’ll view the essentials from the core ideas that haven’t changed in decades to the ways cloud and AI help today. If you’re building your first pipeline or running a mature platform, these practices hold up where it matters: in production.

Why Data Modeling: The Case for Planning

Data modeling gives your data purpose and helps teams avoid costly surprises. Here’s why it matters:

- Builds trust: When everyone uses the same definitions and entities, marketing, product, and finance all see the same numbers: no more “which dashboard is right?” debates.

- Speeds delivery: Clearly defined relationships, keys, and constraints reduce rework and make true self-service analytics possible.

- Controls cost: Thoughtful partitioning, clustering, and access patterns cut down on full scans and wasted compute, keeping your data operations efficient.

- Adapts to change: Using versioned schemas and semantic layers lets your models evolve over time without breaking downstream processes.To know more about why data modeling and semantic layer is important.

- Boosts quality and governance: Clear constraints, data lineage, and defined ownership help catch problems early and manage access effectively.

- Ready for machine learning: If your data models are well-documented and built with reusable features, your machine learning workflows naturally become smoother and more reliable. Both your training and inference processes stay consistent when everything is well-defined and easy to reuse.

- Understanding the business problem you're attempting to solve (conceptual model) is a smart place to start. After that is evident, determine the most effective way to arrange your data to support it (logical model), and then implement it on your platform (physical model). Start with a basic model and make notes as you go along. Then improve it over time as you observe how well it works in actual situations.

From Punch Cards to AI: How Data Modeling Evolved

The way we shape data has shifted dramatically over the last fifty years.

- 1970s – 1980s: Relational models and ER diagrams took over transactional systems. The brief was simple: record accurately, retrieve quickly.

- 2000s: Analytics needed speed. Dimensional modeling, with star and snowflake schemas, made reports fast and decision‑friendly.

- 2010s: Big data arrived. Teams favored data lakes and schema‑on‑read, loading first and shaping later with flexible ELT.

- Today: Real‑time sits beside the batch. Warehouses like Snowflake and BigQuery scale with little fuss. Semantic layers align business definitions, while AI suggests keys, relationships, and optimizations.

Learn more about How AI is Integerated with Data Modelling

Performance Optimization and Scalability: Data Modeling Best Practices for High-Growth Systems

Normalization vs. Denormalization: Finding the Balance

- OLTP systems typically lean on normalization for integrity and efficient updates.

- OLAP systems often denormalize to cut down on joins and keep reads fast.

Most modern stacks mix the two: normalized for operations, carefully denormalized for analytics. Let your access patterns and latency goals decide the split and revisit the decision as usage changes.

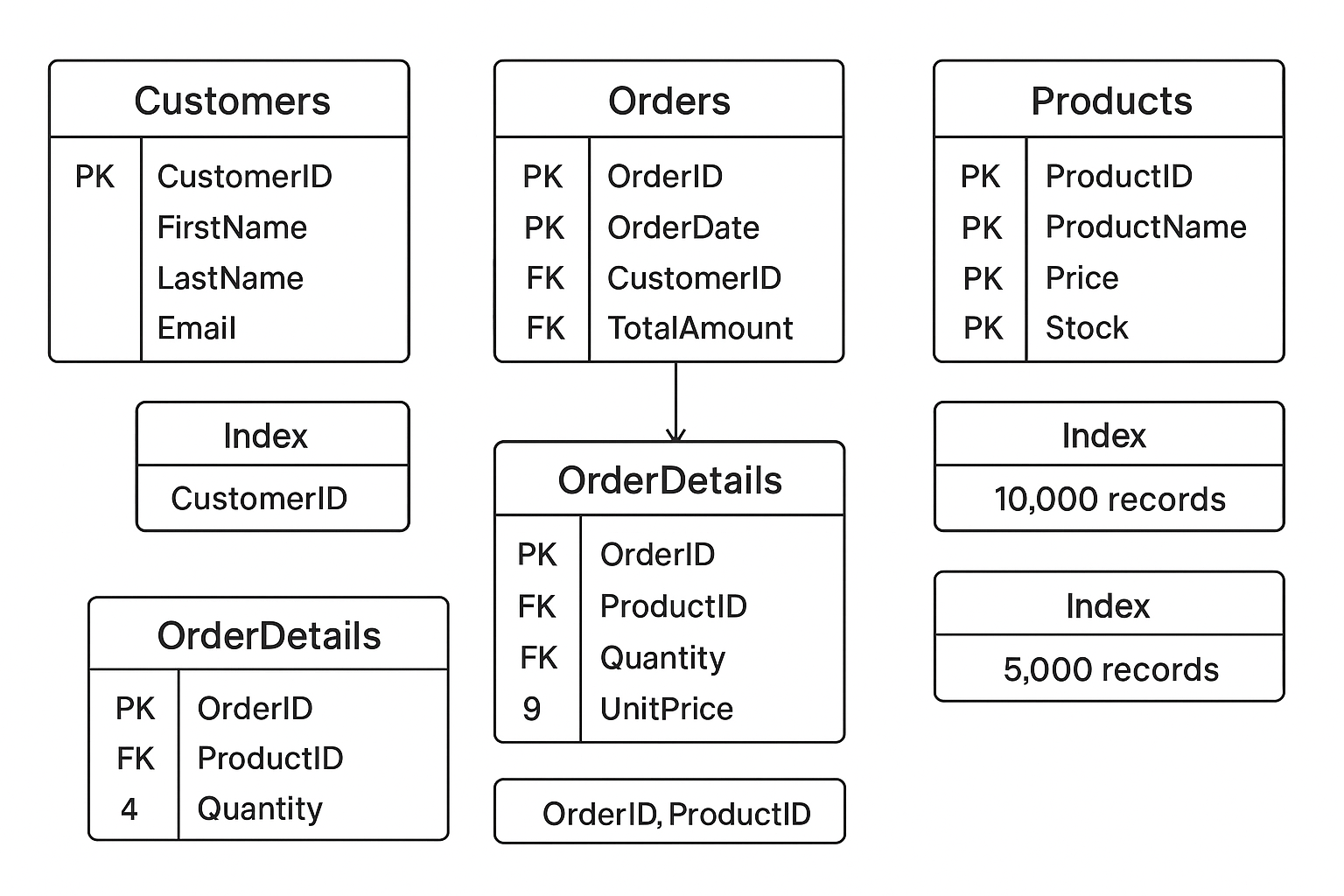

Partitioning and Indexing for Cloud Warehouses

Cloud engines can swallow huge datasets, but layout matters.

- Partition large tables (e.g., by date or region) to limit what scans.

- Cluster or index columns you filter on repeatedly.

- Indexes make reading data faster but writing data slower. Choose whether to use them based on your read or write ratio, and review your decision as workloads change.

Surrogate Keys and Constraints in Distributed Systems

Natural keys like email addresses change and collide. Surrogate keys (system‑generated IDs) – stay stable, make joins cheaper, and travel safely across services. Pair them with foreign keys and you protect integrity even as your footprint grows.

Versioning Your Schemas

Treat schemas like code. Version them, document changes, support rollbacks, and keep a history. Tools such as dbt make this routine and help you avoid quiet “schema drift.”

AI/ML-Integrated Data Modeling Best Practices

As ML moves to the center, models must deliver clean, consistent, ready‑to‑use data.

- Feature alignment: expose reusable, stable features in your logical models.

- Schema versioning: keep training and inference compatible across changes.

- Data governance: preserve entity integrity in both training and production.

- AI assistance: let tools suggest relationships or spot anomalies – humans still approve.

- Balanced design: normalize where accuracy matters; denormalize where low‑latency scoring wins.

- Rich metadata: document entities, attributes, and lineage so results are repeatable.

Handled well, these habits reduce drift and make ML systems easier to scale and trust.

Performance and Scalability: Turning Design Into Reality

A tidy diagram is a start; good response times are the finish.

- Normalize to remove redundancy and simplify updates – watch the cost of cross‑table joins.

- Denormalize where critical queries are too slow or too expensive.

- Measure, tune, and repeat – data volume and usage change the game over time.

Where the Concepts Show Up in Practice

E‑commerce: Understanding Customer Behavior

Retailers tend to use dimensional models for sales and inventory. A star schema that ties a sales fact table to product, customer, and time dimensions powers real‑time tiles like top sellers and hourly revenue. A small graph layer can handle recommendations (“people who bought this also bought…”), improving personalization and average order value.

Financial Services: Compliance and Risk

Banks rely on normalized models for audit trails and regulator‑ready reports – every transaction must be precise and traceable. For risk analytics, dimensional marts aggregate exposure by region, portfolio, and asset class so outputs are fast and consistent.

SaaS Products: From Signup to Churn

SaaS teams blend patterns: relational for billing, event streams for product usage, and document stores for tickets and feedback. Together they form one view of the customer journey – from activation to expansion to churn.

IoT and Real‑Time Systems: Sensor Data

When millions of devices start sending out events, relying solely on batch processing just won’t do the trick. That’s where event-oriented models come into play for handling data ingestion. On the other hand, time-series databases allow for real-time analysis and alerting while the data is still fresh.

The Three Pillars of Data Modeling Concepts: Conceptual, Logical, Physical

The basics haven’t disappeared – they scaled up. Think house building: agree on rooms, sketch the floor plan, then pick materials. Models follow the same flow.

- Conceptual Model: What the Business Actually Cares About

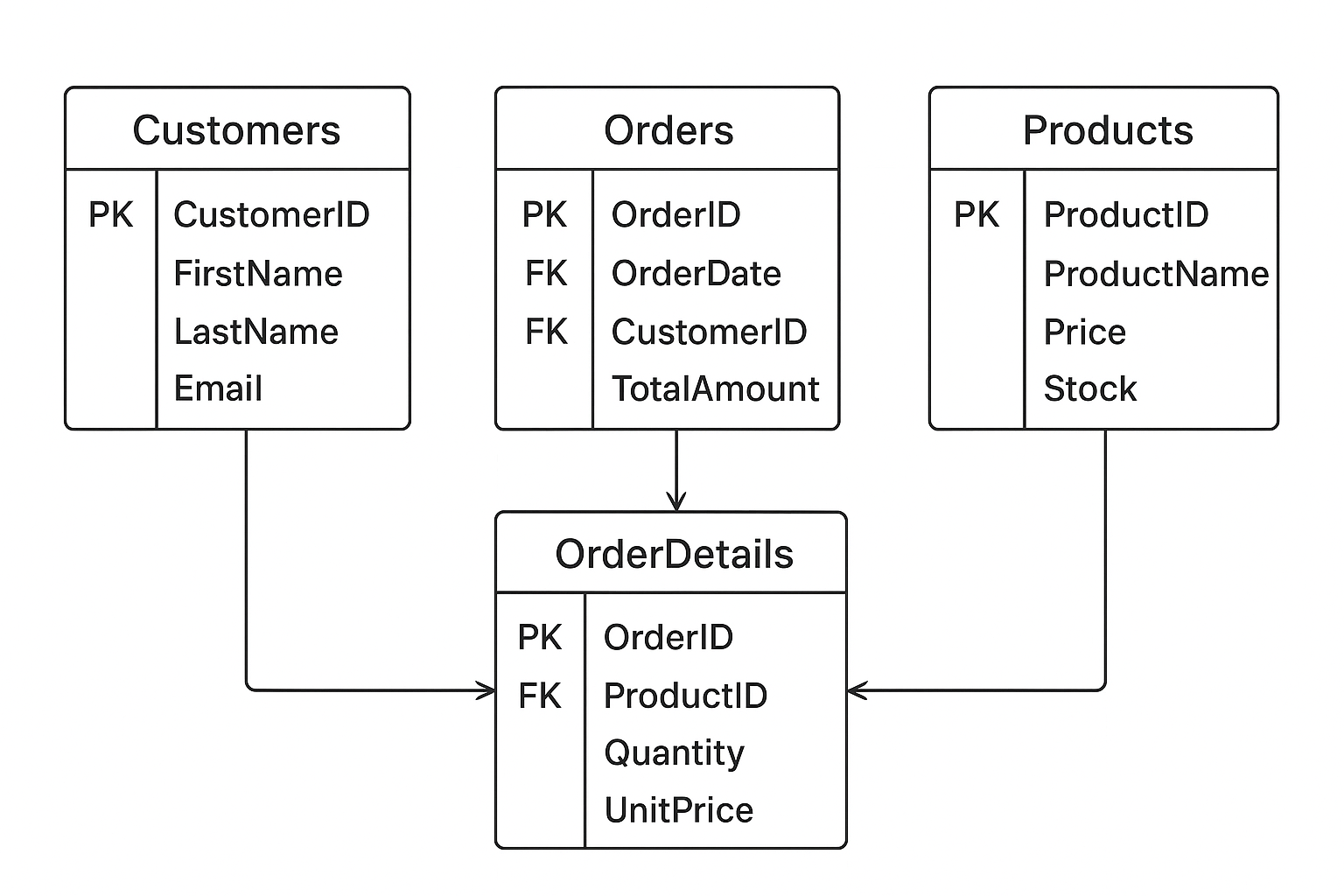

Capture the business view without technical noise: high‑level entities and relationships. Customers place orders, orders contain products, users belong to organizations. It’s the handshake between domain experts and engineers. Skip it and you risk a system that’s correct on paper but misaligned with how the business works.

- Logical Model: Adding Structure and Rules

Define tables (or collections), attributes, keys, and constraints, still independent of a specific database. Set primary and foreign keys plus the rules that keep data clean. Example: Customer has Customer_ID, Name, Email; Orders has Order_ID, Customer_ID, Date; the relationship is explicit and enforceable. A clear logical model prevents confusion and keeps teams on the same definitions.

- Physical Model: Making It Real

Turn the design into an actual schema. Choose types, create indexes, plan partitions, and tune for performance. This is where VARCHAR(100) becomes real, Email might be UNIQUE, Customer_ID gets an index, and Orders partitions by date.

Here’s a simple SQL illustration:

CREATE TABLE Customer (

Customer_ID INT PRIMARY KEY,

Name VARCHAR(100),

Email VARCHAR(255) UNIQUE

);

CREATE TABLE Orders (

Order_ID INT PRIMARY KEY,

Order_Date DATE,

Customer_ID INT,

FOREIGN KEY (Customer_ID) REFERENCES Customer(Customer_ID)

);

Each layer builds on the last. Skipping the concept and your logic won’t reflect the business. Rushing the logical and the physical models becomes expensive to maintain. When the three aspects are right, you create a solution that is technically robust and genuinely valuable in practice.

Four Data Modeling Techniques That Solve Real Problems

There’s no single hammer for every job. Use the pattern that fits.

- Dimensional modeling (star/snowflake) for fast dashboards and repeatable analytics. Sales facts link to product, customer, and date; the predictability keeps queries snappy. Trade‑off: less flexible for open‑ended exploration.

- Relational/normalized modeling for transactional integrity and clean updates.

- Document modeling for nested, evolving records that don’t fit rows nicely.

- Graph modeling for rich relationships and path queries (recommendations, fraud, networks).

The Modern Landscape of Data Modeling Tools: What’s New, What’s Next

Data modeling tools have evolved significantly:

- Classic Tools: ERWin, Toad, ER/Studio are ideal for traditional relational and enterprise systems.

- Modern Tools: dbt, LookML, SQLMesh focus on transformations, semantic layers, and analytics-ready models.

- Cloud & AI Tools: Databricks Unity Catalog, Snowflake Semantic Layer manage governance, lineage, and access control.

- Governance and access: Platforms like Databricks Unity Catalog and Snowflake’s Semantic Layer bring lineage, permissions, and shared definitions to the center.

- Pick tools that match the team and the job like transactional, analytical, or streaming.

Selecting the right data modeling tools depends on the team, platform, and type of modeling (transactional, analytical, or streaming).

Implementation Roadmap and Emerging Trends in Data Modeling

- Clarify business requirements: entities, attributes, and relationships.

- Build conceptual and logical models to map those relationships.

- Choose techniques: dimensional, graph, document, or a hybrid.

- Optimize: normalize where needed; denormalize for read speed; partition big tables.

- Select supporting tools that fit your workflow and platform.

- Validate with production‑like data and iterate on what you learn.

Emerging trends:

- Trends to Watch

- AI‑assisted schema generation and tuning.

- Unified semantic layers that keep business and analytics views in sync.

- Event‑driven pipelines and models.

- Self‑service modeling for analysts and business teams.

Timeless Principles:

- Understand the business context.

- Design with clear logic and rules.

- Optimize for performance as data grows.

- Evolve the model with the system.

Tools and AI will keep changing how we build, but the aim is steady: turn raw data into dependable intelligence. Invest the time to model well and your dashboards, decisions, and predictions will stand on firm ground. The bridge between data and action has a name – good data modeling.

FAQ: Data Modeling

- What is data modeling?

Think of it as the blueprint for your data. It turns messy, real‑world concepts (customers, orders, products) into a clean structure that’s easy to query and hard to break. Done well, it makes numbers consistent, reports trustworthy, and changes less painful.

- How is data modeling different from schema design?

Schema design is the last mile – the specific tables, columns, and types in a given database. Data modeling is the full journey: agree on the business concepts (conceptual), define keys and relationships (logical), then implement and tune (physical). The model ensures the schema matches how the business actually works.

- When should I denormalize?

Denormalization is appropriate when read operations dominate and complex joins are slowing down performance. If your dashboards are lagging or queries require multiple heavy joins, denormalizing can improve speed and efficiency. It’s important, however, to maintain clear lineage back to the source data to ensure accuracy and avoid silent data drift.

- Star schema or snowflake schema?

Start with a star schema – it’s simpler to query and usually fast enough. Move to snowflake when you have very large or shared dimensions and need more organization or reuse across multiple fact tables.

Glossary (Quick Reference)

- Fact table: The table that stores the “things that happened” with numbers attached – like each sale, click, or payment and its measures (revenue, quantity, duration).

- Dimension: The who/what/when/where that describes your facts – customers, products, dates, regions – used to slice and filter reports.

- Surrogate key: A stable, system - generated ID (like customer_key) that doesn’t change, making joins reliable even if natural identifiers (like email) do change.

- Semantic layer: A business‑friendly map of your data that standardizes metrics and definitions, so “active user” or “net revenue” means the same thing everywhere.

- Star schema: A dimensional modeling approach where a central fact table is directly connected to multiple dimension tables. This design enables efficient, clear, and easily interpretable querying.

- Snowflake schema: A more advanced dimensional model in which dimension tables are further normalized into sub-tables, enhancing structure and ensuring consistency across large datasets.

- Normalization: The practice of organizing data to reduce redundancy, maintain consistency, and safeguard overall data integrity.

- Denormalization: The intentional introduction of redundancy or the merging of tables to simplify queries and improve read performance.

.png)

.png)